Cheng-Shiu Chung1,2, MS, Matthew J. Hannan1,3, UG, Hongwu Wang1,2, PhD, Annmarie R. Kelleher1,2, PT, Rory A. Cooper1,2, PhD

1Human Engineering Research Laboratories, Department of Veterans Affairs, Pittsburgh, PA;

2Department of Rehabilitation Science and Technology, University of Pittsburgh, Pittsburgh, PA;

3College of General Studies, University of Pittsburgh, Pittsburgh, PA

ABSTRACT

Without a standardized measurement tool, it is difficult to evaluate which assistive robotic manipulator (ARM) and user interface would best meet a user’s needs. Evidence of outcomes can show the relative benefits of different technologies, so evidence of the effectiveness of an ARM and its user interface is necessary to demonstrate the efficacy of an intervention. As no existing tool provides a standardized measure of ARM function, this pilot study was undertaken to create an adapted Wolf Motor Function Test for ARM (WMFT-ARM).

The WMFT-ARM identifies the user’s basic understanding of the ARM user interface and assesses functional ability in complex daily tasks.

This adapted outcome instrument will allow clinicians to identify and evaluate ARMs and user interfaces that clients prefer and provide a way to quantify and qualify outcomes of their interventions.

INTRODUCTION

A long-term study has shown that ARMs increased users’ independence and reduced attendant care time (Bach, Zeelenberg, & Winter, 1990). Commercially available ARMs, such as the iARM by Exact Dynamics (Netherlands) and the Jaco robotic arm by Kinova (Canada), can improve quality of life and assist in activities of daily living (ADL) (C.-S. Chung & Cooper, 2012), allowing opportunities for further independent living. The user interfaces of ARMs play an important role in performing ADL. A review article (C.-S. Chung, Wang, & Cooper, 2013) characterized the functional abilities supported by commercial and developmental user interfaces using the International Classification of Functioning (ICF). However, the absence of standard performance evaluation tools makes it difficult to establish evidence-based practice for ARMs. It is also hard for clinicians to quantify the efficacy and effectiveness of their ARM interventions.

For quantified performance evaluation, an exploratory study (Schuyler & Mahoney, 2000) evaluated user interfaces of a desktop-mounted ARM (UMI-RTX) with three standard occupational therapy assessment tests: the Jebsen Hand Test, the Box and Block Test, and the Minnesota Rate of Manipulation Test. Although ARM users performed significantly slower with all user interfaces than people with stroke or other disabilities, it was noted that participants were not able to complete any of the tests without the ARM. However, it is difficult to show the direct benefits to ARM users from these test results. Alternatively, ARM performance can be measured within a simulated, controlled environment. A portable ADL task board (C. Chung, Wang, Kelleher, & Cooper, 2013) has demonstrated its usability for researchers to compare user interfaces, for clinicians to train or evaluate users, and for suppliers to collect evidence during home evaluation. However, these assessment tools require specific standardized devices and objects.

In this paper, we introduce an Adapted Wolf Motor Function Test for ARM (WMFT-ARM), which uses daily objects that can be easily obtained. The WMFT-ARM will allow clinicians to identify and evaluate the ARM and user interface that clients prefer and provide a way to quantify and qualify outcomes of their interventions.

METHODOLOGY

Adapted Wolf Motor Function Test for Assistive Robotic Manipulators (WMFT-ARM)

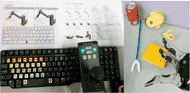

Figure 1, Left: ARM user interfaces and instructions; Right: The daily objects that can be used for the WMFT-ARM – soda can, mouth stick, lock or keyhole, key, and pad.

The WMFT-ARM is adapted from the Wolf Motor Function Test (WMFT). The WMFT is a clinically reliable and valid measurement tool that involves timed functional tasks associated with ADL for upper extremity performance evaluation (Wolf et al., 2001). The original WMFT (Taub, Morris, Crago, & King, 2011) contains 17 tasks. However, some of these tasks are not applicable to the configuration and capability of ARMs. For example, the task of folding towels requires two hands moving synchronously. In addition, some objects can be substituted: pencils can be replaced by mouth sticks if the ARM user does not have the fine muscle movement to control a pen or pencil, and paper clips can be replaced by keys with or without key adaptors. The testing procedures were established based on the tasks listed in Table 1. Table 1 identifies the task item, the setup for the task, the specific task itself, and the verbal instructions for completion of the task.

| Task Item | Setup | Task | Verbal Instructions |
|---|---|---|---|
| 1. Robot hand to table | The ARM is in home position away from the pad | Move robotic hand to the pad | On “Go”, move robotic hand to the pad |
| 2. Hand to box (top) | The ARM is resting on the touchpad and the box is positioned to the right of it on top of the table | Move robotic hand to top of box | On “Go”, position the hand from the start point to the top of the box |
| 3. Weight (12oz beverage) to top of box | Beverage is grasped with the ARM while resting on the pad with a box to the right of it | To place the 12oz beverage on top of the box to the right of it | On “Go”, place the 12oz beverage on top of the box |
| 4. Position beverage to mouth | Beverage is grasped with the ARM while resting on top of touch-pad | To position the 12oz beverage 1” in front of the subject’s mouth | On “Go”, position the 12oz beverage to your mouth |
| 5. Lift mouth stick | Position the mouth stick on the touch pad while the ARM is in home position | To lift the mouth stick from the touch-pad | On “Go”, lift the mouth stick from the touch-pad |
| 6. Lift up key | Place on the table in front of the ARM while the ARM is in home position | Lift the key from the table | On “Go”, lift the key from the table |
| 7. Turn key in lock | With the key in the lock, the ARM positioned directly on top of the key ready to grasp it | Grasp the key, turn it clockwise 90°, then counter-clockwise 90° | On “Go”, grasp the key, turn it 90° clockwise, then 90° counter-clockwise |
| 8. Lift basket | The basket will be placed on a surface lower than the table and to the right, with the ARM in home position | Lift the basket from the lowered position and place it onto the table directly in front of the ARM base | On “Go”, pick up the basket, and place it on the table in front of the ARM |

Intra-rater Reliability

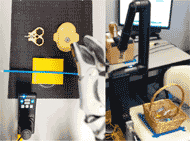

Figure 2, Left: Setup for pick up mouth stick; Right: Setup for lift the basket onto an elevated surface

We examined the reliability of the WMFT-ARM with a trained rater. All the tasks were pre-programmed on the ARM to standardize the timing from start to completion of the designated tasks. A trained rater administered the WMFT-ARM and a researcher executed the pre-programmed trajectories. The ARM trajectory data were recorded as the reference for completion time. We performed the WMFT-ARM three times: two tests were performed on the same day, four hours apart, and the third was performed eight days later.

We analyzed the data using a Bland-Altman plot (Figure 3) to show the differences between the ARM data and the rater’s measurements. Excellent agreement in the Bland-Altman plot indicates that the ratings are reliable and valid compared to the criterion measures.
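As a rough illustration of the Bland-Altman analysis described above, the sketch below computes the bias (mean difference) and the conventional 95% limits of agreement (bias ± 1.96 standard deviations of the differences) for paired completion times. The function name `bland_altman`, the sample timings, and the use of seconds as the unit are assumptions made for this example; they are not data or code from the study.

```python
from statistics import mean, stdev


def bland_altman(rater_times, arm_times):
    """Return (bias, lower_limit, upper_limit) for paired measurements.

    bias is the mean of the paired differences (rater minus ARM log);
    the limits of agreement are bias +/- 1.96 * SD of the differences.
    """
    if len(rater_times) != len(arm_times):
        raise ValueError("paired measurements must have equal length")
    diffs = [r - a for r, a in zip(rater_times, arm_times)]
    bias = mean(diffs)
    spread = 1.96 * stdev(diffs)
    return bias, bias - spread, bias + spread


# Hypothetical timings in seconds, for illustration only (not study data).
rater = [9.4, 8.1, 28.9, 31.0, 73.2]     # stopwatch times from the rater
arm_log = [9.2, 8.4, 28.7, 30.8, 73.5]   # times taken from the ARM trajectory log

bias, lower, upper = bland_altman(rater, arm_log)
print(f"bias = {bias:.2f} s, limits of agreement = ({lower:.2f}, {upper:.2f}) s")
```

A bias near zero with narrow limits would suggest that the rater's timing closely tracks the ARM's recorded timing; the plot itself places each pair's difference against the pair's mean.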

Test Protocol

The study was conducted in a controlled environment at the Human Engineering Research Laboratories (HERL). The study protocol was approved by the Institutional Review Board (IRB) of the University of Pittsburgh. After informed consent was obtained, it was verified that each subject met the inclusion and exclusion criteria. A questionnaire was then administered regarding the use of assistive technology and disability of the arm, shoulder, and hand (QuickDASH) (Beaton, Wright, & Katz, 2005). The WMFT-ARM was then conducted. Each task took approximately 2-10 minutes to complete, and we allotted up to one hour for these activities. If the time exceeded 10 minutes or the subject expressed frustration, the task was terminated. It was not considered a protocol deviation if the participant was unable to complete all tasks in the given time period. The time to complete each task and the trajectory of the robot were recorded. Following completion of the test, a study investigator conducted a brief questionnaire and an open interview related to use of the interfaces.

The participants were 18 years of age or older, used a powered wheelchair as their primary means of mobility, and were able to operate a computer keyboard. Participants with active pelvic, gluteal, or thigh wounds, or with a pressure ulcer in these regions within the past 30 days, were excluded because the study involved prolonged sitting. Participants with a significant cognitive disability that precluded them from providing informed consent were also excluded.

Two ARMs with their original user interfaces were utilized in this study. The JACO robotic arm uses a 3-axis joystick interface with a variety of mode selection buttons, while the iARM robotic arm uses a 4x4 keypad to control the device.

RESULTS AND DISCUSSION

In this pilot study, four male electric powered wheelchair users (age: 40.8±18.3) were recruited, as shown in Table 2. The QuickDASH scores indicate that Participants 1-3 have moderate to severe upper extremity impairment (e.g., in reaching, grasping, holding things, or using a computer mouse). Due to the limited hand dexterity of the first two participants, we used the 2-axis mode of the joystick instead of the original 3-axis mode. However, no participant had difficulty in using the keyboard. During the testing, Participants 1 and 2 expressed hand and arm fatigue from pressing the rubber button of the joystick interface and required a 10-minute break.

| Participant ID | Gender | Age | QuickDASH | User Interface* |
|---|---|---|---|---|
| 1 | Male | 27 | 70.5 | KB, 2-axis JS |
| 2 | Male | 23 | 68.2 | KB, 2-axis JS |
| 3 | Male | 57 | 52.3 | KB, 3-axis JS |
| 4 | Male | 56 | 36.4 | KB, 3-axis JS |

*KB: Keyboard, JS: Joystick
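The reported age summary (40.8±18.3) can be reproduced from the ages in Table 2 if the spread is taken to be the sample standard deviation. The short sketch below shows that calculation; the helper name `summarize` is hypothetical, and the use of the sample (n-1) standard deviation is an assumption inferred from the numbers rather than something stated in the text.

```python
from statistics import mean, stdev

# Values transcribed from Table 2.
ages = [27, 23, 57, 56]
quickdash = [70.5, 68.2, 52.3, 36.4]


def summarize(values):
    """Format values as mean±SD using the sample (n-1) standard deviation."""
    return f"{mean(values):.1f}±{stdev(values):.1f}"


age_summary = summarize(ages)
print("Age:", age_summary)
print("QuickDASH:", summarize(quickdash))
```

The same helper can be applied to any column of per-participant values, such as the completion times for a single task.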

Table 3 and Figure 4 show the completion time of each task item in the WMFT-ARM. The average task completion time with the joystick user interface was shorter in most of the tasks. However, in task 6 (lift up key), the keyboard was faster on average than the joystick. This might be because joystick users spent more time switching modes to adjust to the desired hand position for picking up the key. Both user interfaces showed very high functional ability scores in the simple tasks and relatively low scores in the difficult tasks.

| Task Item | Joystick: Completion Time | Joystick: Functional Ability | Keyboard: Completion Time | Keyboard: Functional Ability |
|---|---|---|---|---|
| 1 | 9.19±3.57 | 5.0±0.0 | 15.28±1.54 | 4.5±1.0 |
| 2 | 8.42±3.15 | 5.0±0.0 | 35.36±28.09 | 4.5±1.0 |
| 3 | 28.71±19.95 | 4.8±0.5 | 60.55±59.82 | 4.3±1.3 |
| 4 | 30.83±25.04 | 4.5±1.0 | 103.17±83.54 | 4.0±1.2 |
| 5 | 73.39±29.85 | 4.0±0.8 | 172.41±154.19 | 3.5±1.7 |
| 6 | 233.07±164.48 | 3.0±1.8 | 156.77±111.45 | 3.3±1.5 |
| 7 | 32.30±6.71 | 5.0±0.0 | 46.21±9.20 | 4.0±2.0 |
| 8 | 30.74±4.55 | 4.7±0.6 | 75.38±31.05 | 4.5±0.6 |
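To make the comparison in Table 3 easier to scan, the sketch below lists which interface had the shorter mean completion time for each task and the keyboard-to-joystick ratio of the means. Only the mean values from Table 3 are used (in whatever time unit the table reports); the variable and function names are hypothetical, and comparing means alone ignores the large standard deviations, so this is an illustration rather than a statistical test.

```python
# Mean completion times transcribed from Table 3, keyed by task item.
joystick = {1: 9.19, 2: 8.42, 3: 28.71, 4: 30.83,
            5: 73.39, 6: 233.07, 7: 32.30, 8: 30.74}
keyboard = {1: 15.28, 2: 35.36, 3: 60.55, 4: 103.17,
            5: 172.41, 6: 156.77, 7: 46.21, 8: 75.38}


def faster_interface(task):
    """Name the interface with the shorter mean completion time for a task."""
    return "joystick" if joystick[task] < keyboard[task] else "keyboard"


# Ratio > 1 means the keyboard took longer on average than the joystick.
ratios = {task: keyboard[task] / joystick[task] for task in joystick}
keyboard_faster = [task for task in joystick if faster_interface(task) == "keyboard"]

for task in sorted(joystick):
    print(f"Task {task}: {faster_interface(task)} faster, "
          f"keyboard/joystick ratio = {ratios[task]:.2f}")
print("Tasks where the keyboard was faster on average:", keyboard_faster)
```

With these means, task 6 is the only item where the keyboard comes out ahead, which matches the observation in the text.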

During the testing, we noticed that, when using the keyboard user interface for the first several tasks, the users frequently visually verified the symbols on the keyboard and the movement of the ARM. However, the frequency of visual verification decreased in the later tasks. This suggests that the users had memorized the key locations and built up motor automaticity. In the first four tasks, most users used only translational motion to complete the task. In tasks 5 and 6, mode switching between translation and rotation slowed the joystick interface. However, in task 7, which requires a single rotation, the proportional control of the joystick interface could accelerate the ARM to the desired rotational speed faster.

In the open interview, the participants liked the “long reach, capability to reach and grasp, and providing independence.” The items that participants liked least were the cost and the added size of the ARM when not in use. If redesigning the ARM, the participants would “allow it to lift more weight.” The places where they would not be willing to use the ARM were quiet places such as a church or library.

Study Limitation

As noted, the sample size is small, so it is difficult to generalize the performance differences found between the two user interfaces. Although the keyboard and joystick can both be applied to the two ARMs, we only tested the ARMs with their original interfaces. In addition, in order to record the robot data of the iARM, we used a standard keyboard, which is similar in size to the original 4x4 keypad but has different key locations. The outcome might be slightly different when using the 4x4 keypad.

CONCLUSION

Standardized measurement tools help clinicians to guide their practice, assess their intervention outcome, and provide evidence for policy-makers and funding sources. The WMFT-ARM provides clinicians with a method to quantify and qualify outcomes of their intervention on an ARM and its user interface.

When the WMFT-ARM is applied to two ARM systems with their original user interfaces, the quantified and qualified performance allows clinicians to determine which user interface would be easier and more beneficial to the clients, as well as which control setting performs better.

This WMFT-ARM provides an essential and unique component of performance evaluation that will contribute to a comprehensive approach that is necessary in the field of assistive robotic technology. This standardized test may help clinicians and researchers to build up clinical evidence in making better decisions when prescribing this assistive technology to the clients. The tool will potentially facilitate evaluation and comparison with studies of the efficacy and effectiveness of new user interfaces that are introduced into the marketplace, as well as other research applications.

REFERENCES

Bach, J. R., Zeelenberg, A. P., & Winter, C. (1990). Wheelchair-mounted robot manipulators. Long term use by patients with Duchenne muscular dystrophy. American Journal of Physical Medicine & Rehabilitation, 69(2), 55–59. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/12212433

Beaton, D. E., Wright, J. G., & Katz, J. N. (2005). Development of the QuickDASH: comparison of three item-reduction approaches. The Journal of bone and joint surgery. American volume, 87(5), 1038–46. doi:10.2106/JBJS.D.02060

Chung, C., Wang, H., Kelleher, A., & Cooper, R. A. (2013). Development of a Standardized Performance Evaluation ADL Task Board for Assistive Robotic Manipulators. In Proceedings of the Rehabilitation Engineering and Assistive Technology Society of North America Conference. Seattle, WA.

Chung, C.-S., & Cooper, R. A. (2012). Literature Review of Wheelchair-Mounted Robotic Manipulation: User Interface and End-user Evaluation. In RESNA Annual Conference.

Chung, C.-S., Wang, H., & Cooper, R. A. (2013). Functional assessment and performance evaluation for assistive robotic manipulators: Literature review. The journal of spinal cord medicine, 36(4), 273–89. doi:10.1179/2045772313Y.0000000132

Schuyler, J. L., & Mahoney, R. M. (2000). Assessing Human-Robotic Performance for Vocational Placement. IEEE Transactions on Rehabilitation Engineering, 8(3), 394–404. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/11001519

Taub, E., Morris, D., Crago, J., & King, D. (2011). Wolf Motor Function Test (WMFT) Manual (pp. 1–31). Retrieved from http://www.uab.edu/citherapy/images/pdf_files/CIT_Training_WMFT_Manual.pdf

Wolf, S. L., Catlin, P. A., Ellis, M., Archer, A. L., Morgan, B., & Piacentino, A. (2001). Assessing Wolf Motor Function Test as Outcome Measure for Research in Patients After Stroke. Stroke, 32(7), 1635–1639. doi:10.1161/01.STR.32.7.1635

ACKNOWLEDGEMENTS

This work is funded by the ASPIRE Grant #1262670 and by Quality of Life Technology Engineering Research Center, National Science Foundation (Grant #0540865). The work is also supported by the Human Engineering Research Laboratories, VA Pittsburgh Healthcare System. The contents do not represent the views of the Department of Veterans Affairs or the United States Government.
